Before continuing, it is important to understand the goal of setting up a cluster with OviOS.

As a storage appliance, OviOS aims to provide High Availability for the storage pools while ensuring that no data corruption occurs.

The OviOS Linux HA cluster concept:

* 2 or more OviOS Linux nodes.

* Backend storage that can be accessed by all OviOS nodes at the same time. These can be JBODs, shared RAW devices, iSCSI or FC LUNs and so on.

* One resource group (RG) that contains the VIP, the storage resource and the SCSI fence agent. They must be grouped so that they are always migrated together.

* The node on which the RG is running is the active node. It provides access to data (NFS, SMB, iSCSI) through the VIP. If the storage resource has started correctly, the pool(s) are imported on this node.

* Should this node fail, or should the RG be moved manually to another node in the cluster, the storage resource (using the zfs-hac service) exports the pool from the node, the SCSI fence agent releases the SCSI reservations, and the pool is imported on another node when the RG starts up there.

* ZFS is not a clustered file system, so the pool must not be accessed from multiple nodes at the same time: doing so can lead to data corruption.

Setup:

3 OviOS Linux VMs created on a KVM Host.

You will need the OviOS Linux 3.02 ISO image to install the new systems, and the OviOS upgrade bundles provided by the distributor.

Shared storage, in this case simulated by an iSCSI LUN exported from an openSUSE VM.

1. Install OviOS Linux 3.02 on all 3 VMs.

After the installation completes, set the hostname on each node separately.

```
ovios-shell> version
Distributor ID: OviOS Linux
Description: Linux Storage OS
Release no: 20190821
Codename: Arcturus
Version no: 3.02
Kernel: 4.9.144-OVIOS
ovios-shell>
```


```
ovios-shell> hostname ha1
Updated hostname to ha1.
ovios-shell>
```


```
ovios-shell> hostname ha2
Updated hostname to ha2.
ovios-shell>
```


```
ovios-shell> hostname ha3
Updated hostname to ha3.
ovios-shell>
```


2. Upgrade the OS with the provided upgrade bundles:

- upgrade-ovios-4.14.99.tar.gz
- ovios-ha-cluster-upgrade-4.14.99.tar.gz

Download both bundles to each host and place them in the /root folder.

```
ha1:~ # pwd
/root
ha1:~ # ls -1
ovios-ha-cluster-upgrade-4.14.99.tar.gz
upgrade-ovios-4.14.99.tar.gz
ha1:~ #
```


Follow the instructions below to upgrade. It is important to first upgrade the kernel and the other packages from the first bundle (upgrade-ovios-4.14.99.tar.gz) and reboot; only after that, upgrade the cluster packages from ovios-ha-cluster-upgrade-4.14.99.tar.gz.

Run these commands:

```shell
tar xvf upgrade-ovios-4.14.99.tar.gz -C /tmp/
cd /tmp/UPGRADE3.02/
mount /dev/sda1 /boot    # or whatever your boot device is
./upgrade.sh /tmp/UPGRADE3.02/
```

The upgrade script asks for confirmation at several steps; confirm each one and press Enter. Reboot once the upgrade has finished.
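If you script these steps, a small pre-flight check can catch a missing bundle or an unmounted /boot before upgrade.sh starts. The following is a minimal POSIX sh sketch; check_bundles and boot_is_mounted are hypothetical helpers (not part of OviOS), and the bundle names are the ones listed above.

```shell
# Hypothetical helpers, not part of OviOS.

# Succeed only if both upgrade bundles are present in DIR (default /root).
check_bundles() {
    dir=${1:-/root}
    missing=0
    for f in upgrade-ovios-4.14.99.tar.gz ovios-ha-cluster-upgrade-4.14.99.tar.gz; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $dir/$f" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Succeed only if /boot is a mount point according to a mounts table
# (default /proc/mounts, whose second field is the mount point).
boot_is_mounted() {
    awk '$2 == "/boot" { found = 1 } END { exit !found }' "${1:-/proc/mounts}"
}
```

For example, from /tmp/UPGRADE3.02/: `check_bundles /root && boot_is_mounted && ./upgrade.sh /tmp/UPGRADE3.02/`.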

After the reboot, the kernel is at 4.14.99:

```
ovios-shell> version
Distributor ID: OviOS Linux
Description: Linux Storage OS
Release no: 20190821
Codename: Arcturus
Version no: 3.02
Kernel: 4.14.99-OVIOS
ovios-shell>
```


Now upgrade the cluster packages. This is required because the new OpenSSL version installed with the first bundle is not backward compatible.

```shell
tar xvf ovios-ha-cluster-upgrade-4.14.99.tar.gz -C /tmp/
cd /tmp/UPGRADE-CLUSTER3.02/
./upgrade-ovios-cluster.sh /tmp/UPGRADE-CLUSTER3.02
```

There is no need to reboot after this upgrade.

3. Set up the shared storage.

In this case the shared storage is an iSCSI LUN provided by an openSUSE iSCSI target server.

OviOS comes with open-iscsi, so it can act as an iSCSI initiator.

Simply run the following commands on each OviOS node:

```
ha3:~ # echo InitiatorName=`iscsi-iname` > /etc/iscsi/initiatorname.iscsi
ha3:~ # iscsiadm --mode discovery --op update --type sendtargets --portal 192.168.122.55 -l
192.168.122.55:3260,1 iqn.2019-09.com.ovios:ce67786e3214f0bf60bd
Logging in to [iface: default, target: iqn.2019-09.com.ovios:ce67786e3214f0bf60bd, portal: 192.168.122.55,3260] (multiple)
Login to [iface: default, target: iqn.2019-09.com.ovios:ce67786e3214f0bf60bd, portal: 192.168.122.55,3260] successful.
```

Here, 192.168.122.55 is the IP address of the iSCSI target server.

If you want to reproduce this test setup, make sure the iSCSI target is configured correctly and does not require authentication.
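The echo command above overwrites /etc/iscsi/initiatorname.iscsi every time it runs, and iscsi-iname generates a different name on each call. If you re-run the node setup, an idempotent variant keeps the IQN stable, which matters when the target restricts access by initiator IQN. A minimal POSIX sh sketch; ensure_initiator_name is a hypothetical helper.

```shell
# Hypothetical helper. Adds an InitiatorName line to FILE only when none is
# present yet; an existing IQN is left untouched.
ensure_initiator_name() {
    file=${1:-/etc/iscsi/initiatorname.iscsi}
    iqn=$2
    if [ -f "$file" ] && grep -q '^InitiatorName=' "$file"; then
        return 0
    fi
    # No IQN given: generate one with the open-iscsi iscsi-iname tool.
    [ -n "$iqn" ] || iqn=$(iscsi-iname)
    # Append rather than overwrite, so existing comments in FILE survive.
    echo "InitiatorName=$iqn" >> "$file"
}
```

On each node, run `ensure_initiator_name` before the iscsiadm discovery and login shown above.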

The new disk appears in OviOS as a regular device that can be used for storage:

```
ovios-shell> storage
===========================================================
= Your root disk is /dev/sda2
= Do not use /dev/sda2 to create pools
= It will destroy your OviOS installation
===========================================================
===========================================================
= Use either Path, ID or Dev names to create Pools
===========================================================
ata-QEMU_DVD-ROM_QM00002 -> ../../sr0
ata-QEMU_HARDDISK_QM00001 -> ../../sda
scsi-360014058844bfefcf6c453494b3179c5 -> ../../sdb
wwn-0x60014058844bfefcf6c453494b3179c5 -> ../../sdb
ip-192.168.122.55:3260-iscsi-iqn.2019-09.com.ovios:ce67786e3214f0bf60bd-lun-0 -> ../../sdb
ovios-shell>
```


4. Set up the HA cluster.

First, update the /etc/hosts file on each node in the cluster. This is a test setup, so there is no second network and there are no FQDNs.

Add the entries for all nodes at the end of the file on each host:

```
127.0.0.1 localhost
# special IPv6 addresses
::1 localhost ipv6-localhost ipv6-loopback
fe00::0 ipv6-localnet
ff00::0 ipv6-mcastprefix
ff02::1 ipv6-allnodes
ff02::2 ipv6-allrouters
ff02::3 ipv6-allhosts
192.168.122.83 ha1
192.168.122.205 ha2
192.168.122.34 ha3
```

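Because the same entries go into /etc/hosts on every node, an idempotent append avoids duplicate lines if the step is repeated. A minimal POSIX sh sketch; add_host_entry is a hypothetical helper that skips comment lines and treats a name as already present if it appears after the address on any line.

```shell
# Hypothetical helper. Appends "IP NAME" to FILE unless NAME is already
# mapped there, so it can safely be run more than once on each node.
add_host_entry() {
    file=$1 ip=$2 name=$3
    # Look for NAME as a whole field after the address, ignoring comments.
    # A missing FILE makes awk fail, which counts as "not present".
    if awk -v n="$name" '
        /^[ \t]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

On each node, for example: `add_host_entry /etc/hosts 192.168.122.83 ha1`, and likewise for ha2 (192.168.122.205) and ha3 (192.168.122.34).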

4.1 Set up key-based authentication.

First, enable root login and restart sshd on all OviOS nodes.

```
ovios-shell> options ssh.allow.root 1
Changing option: ssh.allow.root ==> on
ovios-shell> services restart sshd
* Stopping SSH Server... [ OK ]
* Starting SSH Server... [ OK ]
ovios-shell>
```


On each OviOS node, run ssh-keygen (with your preferred options) to create a key pair, then copy the public key to the other hosts.

For example, on ha1, copy its key to ha2 and ha3:

```
ha1:~ # ssh-copy-id -i ~/.ssh/id_rsa.pub ha2
ha1:~ # ssh-copy-id -i ~/.ssh/id_rsa.pub ha3
```

On ha2:

```
ha2:~ # ssh-copy-id -i ~/.ssh/id_rsa.pub ha1
ha2:~ # ssh-copy-id -i ~/.ssh/id_rsa.pub ha3
```

On ha3:

```
ha3:~ # ssh-copy-id -i ~/.ssh/id_rsa.pub ha1
ha3:~ # ssh-copy-id -i ~/.ssh/id_rsa.pub ha2
```

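The key exchange is the same on every node: create a key pair, then copy it to every node except the local one. A minimal POSIX sh sketch, assuming the node names ha1, ha2 and ha3 and that `hostname` prints the short node name; peers_of and exchange_keys are hypothetical helpers.

```shell
# Cluster members used in this guide (assumption: short names resolve
# through /etc/hosts as configured above).
NODES="ha1 ha2 ha3"

# Hypothetical helper. Prints every cluster node except the given one.
peers_of() {
    self=$1
    out=""
    for n in $NODES; do
        [ "$n" = "$self" ] || out="$out $n"
    done
    echo $out   # unquoted on purpose: drops the leading space
}

# Hypothetical helper. Creates an RSA key pair with an empty passphrase
# if none exists, then copies the public key to every peer.
exchange_keys() {
    [ -f ~/.ssh/id_rsa ] || ssh-keygen -t rsa -b 4096 -N "" -f ~/.ssh/id_rsa
    for peer in $(peers_of "$(hostname)"); do
        ssh-copy-id -i ~/.ssh/id_rsa.pub "$peer"
    done
}
```

Run `exchange_keys` once on each node; ssh-copy-id asks for the root password of each peer the first time.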

Now you can log in between nodes without a password, for example from ha1 to ha3:

```
ha1:~ # ssh ha3
--------------------------------------
-------------OviOS Linux--------------
-A storage OS featuring zfs on Linux.-
--------------------------------------
Last login: Mon Sep 2 12:04:31 2019
ovios-shell>
```


4.2 Set up the cluster

Enable the cluster option on every node:

```
ovios-shell> options cluster.enable 1
Changing option: cluster.enable ==> on
Changing option: autosync.cluster ==> on if not on already
Option autosync.cluster is already on
Changing option: skip.import ==> on if not on already
Option skip.import is already on
ovios-shell>
```


Enabling cluster.enable also turns on autosync.cluster and skip.import.

In production environments, set up a bonded interface with the same name on all OviOS nodes. This test skips that step because all nodes already have an identical interface (eth0).

Create the cluster configuration with pcs. This command must be run on all nodes in the cluster:

```shell
pcs cluster setup --local --name ovios-cluster ha1 ha2 ha3
```

For now, start the cluster on only one node: the node you want to use to set up the resources.

```
ha1:~ # cluster start
Starting the cluster...
Corosync is running.
Pacemaker is running.
ha1:~ #
```


Now that the cluster is up and running, create the resources.

NOTE:

Before creating the resources, the storage pools should be created.

Use the "pool create" command to create your pools, then run "zfs-admin stop" to export them. From then on, the pools are managed by the cluster.
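Before creating the resources, you can confirm that the pool is no longer imported on the node where it was created. A minimal POSIX sh sketch built on `zpool list`; pool_is_imported and check_exported are hypothetical helpers, and tank is a hypothetical pool name.

```shell
# Hypothetical helper. "zpool list -H -o name POOL" exits non-zero when
# POOL is not imported on this node.
pool_is_imported() {
    zpool list -H -o name "$1" >/dev/null 2>&1
}

# Hypothetical helper. Refuses to hand a pool to the cluster while it is
# still imported locally. "tank" is a placeholder pool name.
check_exported() {
    pool=${1:-tank}
    if pool_is_imported "$pool"; then
        echo "$pool is still imported; run 'zfs-admin stop' first" >&2
        return 1
    fi
    echo "$pool is exported and can be managed by the cluster"
}
```

Use the name you gave to "pool create", for example `check_exported tank`.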

Create the Cluster resources:


- a VIP, the storage service and the SCSI fence service.

```shell
pcs resource create STORAGE lsb:zfs-hac is-managed=true op monitor interval=10s meta resource-stickiness=100
pcs resource create VIP ocf:heartbeat:IPaddr2 ip=192.168.122.33 cidr_netmask=24 nic=eth0 op monitor interval=15s meta resource-stickiness=100
pcs stonith create SCSI-RES fence_scsi devices="/dev/disk/by-path/ip-192.168.122.55:3260-iscsi-iqn.2019-09.com.ovios:ce67786e3214f0bf60bd-lun-0" pcmk_host_map="ha1:1;ha2:2;ha3:3" pcmk_host_list="ha1,ha2,ha3" plug="ha1 ha2 ha3" meta provides=unfencing resource-stickiness=100 power_wait=3 op monitor interval=2s
```


Note that the devices option uses a /dev/disk/by-path name. It must be a persistent name, such as one under /dev/disk/by-uuid or /dev/disk/by-path, because unlike /dev/sdX names these do not change between reboots.

```shell
pcs resource group add RG SCSI-RES STORAGE VIP
```


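This creates a resource group called RG and adds all resources to it, so that they are always started on the same node. Pacemaker starts the members of a group in the listed order (SCSI-RES, then STORAGE, then VIP) and stops them in reverse order.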

```shell
pcs property set stonith-enabled=true
pcs property set no-quorum-policy=stop
```


Consult the official pcs documentation for more options.

Now, start the cluster on the other nodes:

```
# cluster start
```


After a few seconds, the cluster is up and the resources are running.

Notice that the VIP and the storage pools are both on the same node.

Let's demonstrate an automatic failover.

- Verify the cluster status (for example with "pcs status"). In this instance, everything runs on ha1.
- Halt or panic ha1.
- The cluster detects the failure and looks for another available node to move the resources to.

A few seconds later, all resources are started on ha2. The VIP and the storage pools are available again, so clients accessing data through this cluster regain access to their data.

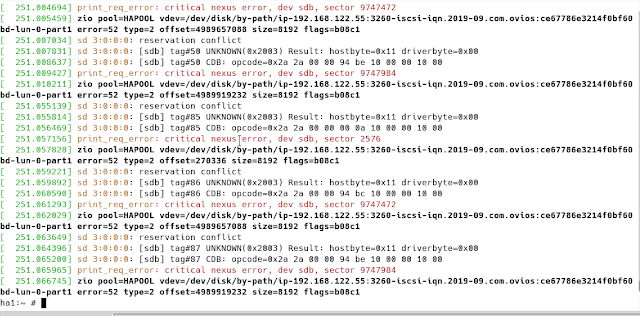

This screenshot shows how fencing protects your pools from being imported on different nodes at the same time: if you try to force-import a pool while the cluster has it started on a different node, you get reservation conflicts and the import fails.

Note that the fencing agent uses the key stored in /var/run/cluster. It creates the /var/run/cluster directory and writes the files in it automatically:

```
ha3:~ # ls -l /var/run/cluster/
total 8
-rw-r----- 1 root root 96 Sep 2 12:27 fence_scsi.dev
-rw-r----- 1 root root 9 Sep 2 13:08 fence_scsi.key
ha3:~ #
ha3:~ # cat /var/run/cluster/fence_scsi.dev
/dev/disk/by-path/ip-192.168.122.55:3260-iscsi-iqn.2019-09.com.ovios:ce67786e3214f0bf60bd-lun-0
ha3:~ # cat /var/run/cluster/fence_scsi.key
62ed0002
ha3:~ #
```

The fence_scsi.dev file is the same on all nodes, but the key is different on each node. On ha1:

```
ha1:~ # cat /var/run/cluster/fence_scsi.key
62ed0000
ha1:~ # cat /var/run/cluster/fence_scsi.dev
/dev/disk/by-path/ip-192.168.122.55:3260-iscsi-iqn.2019-09.com.ovios:ce67786e3214f0bf60bd-lun-0
ha1:~ #
```

And on ha2:

```
ha2:~ # cat /var/run/cluster/fence_scsi.key
62ed0001
ha2:~ # cat /var/run/cluster/fence_scsi.dev
/dev/disk/by-path/ip-192.168.122.55:3260-iscsi-iqn.2019-09.com.ovios:ce67786e3214f0bf60bd-lun-0
ha2:~ #
```
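Each node registers its own key on the shared LUN, so the keys must differ between nodes while fence_scsi.dev stays the same. A minimal POSIX sh sketch that checks a set of collected keys for duplicates; keys_unique is a hypothetical helper.

```shell
# Hypothetical helper. Succeeds when at least one key is given and all keys
# passed as arguments are distinct, i.e. no two nodes share a key.
keys_unique() {
    [ "$#" -gt 0 ] || return 1
    total=$#
    distinct=$(printf '%s\n' "$@" | sort -u | wc -l | tr -d ' ')
    [ "$total" -eq "$distinct" ]
}
```

With the keys shown above, `keys_unique 62ed0000 62ed0001 62ed0002` succeeds. To compare them with what is actually registered on the LUN, sg_persist from sg3_utils (the tool fence_scsi relies on) can list the registered keys, assuming it is available on the node: `sg_persist --in --read-keys --device=/dev/disk/by-path/ip-192.168.122.55:3260-iscsi-iqn.2019-09.com.ovios:ce67786e3214f0bf60bd-lun-0`.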